High-Performance Message Searching in Health Connect

The Problem

Have you ever tried to do a search in Message Viewer on a busy interface and had the query time out? This can become quite a problem as the amount of data increases. For context, the instance of Health Connect I am working with does roughly 155 million Message Headers per day with 21 day message retention. To try and help with search performance, we extended the built-in SearchTable with commonly used fields in hopes that indexing these fields would result in faster query times. Despite this, we still couldn't get some of these queries to finish at all.

More Info Defining a Search Table Class.

For those of us working as HL7 integrators, we know that troubleshooting and responding to issues on a day-to-day basis is a huge part of our role. Quickly identifying and resolving these issues is critical to ensuring that we are maintaining the steady and accurate flow of data. Due to the poor search performance, we would have to gather very detailed information about each specific issue to find examples in Health Connect. Without a very narrow time frame (within a few minutes), we often were unable to get Message Viewer search to return any results before timing out. This caused a significant delay both in determining what the actual problem was and resolving it moving forward.

The Solution

This was not acceptable, so we had to find a solution in order to best serve our customers. Enter WRC.

We created a ticket, and I am happy to report a fix was identified. This fix involved going through our custom SearchTable fields and identifying which fields were not unique enough to warrant being treated as an index by the Message Viewer search.

For example, in our environment, MSH.4 (or FacilityID) is used to denote which physical hospital location the HL7 message is associated with. While this field is important to have on the SearchTable for ease of filtering, many messages come through each day for each facility. This means that it is inefficient to use this field as an index in the Message Viewer search. Counter to this would be a field like PID.18 (or Patient Account Number). The number of messages associated with each account number is very small, so using this field as an index greatly increases the speed of the search.

Adding the Unselective parameter to the appropriate items tells message search which ones to treat as indexes. This in essence modifies the SQL used to pull the messages. Below you will find the difference in queries, based on fields being used as index or not, and how you can use these queries to determine which fields should be Unselective.

Index vs NoIndex Queries

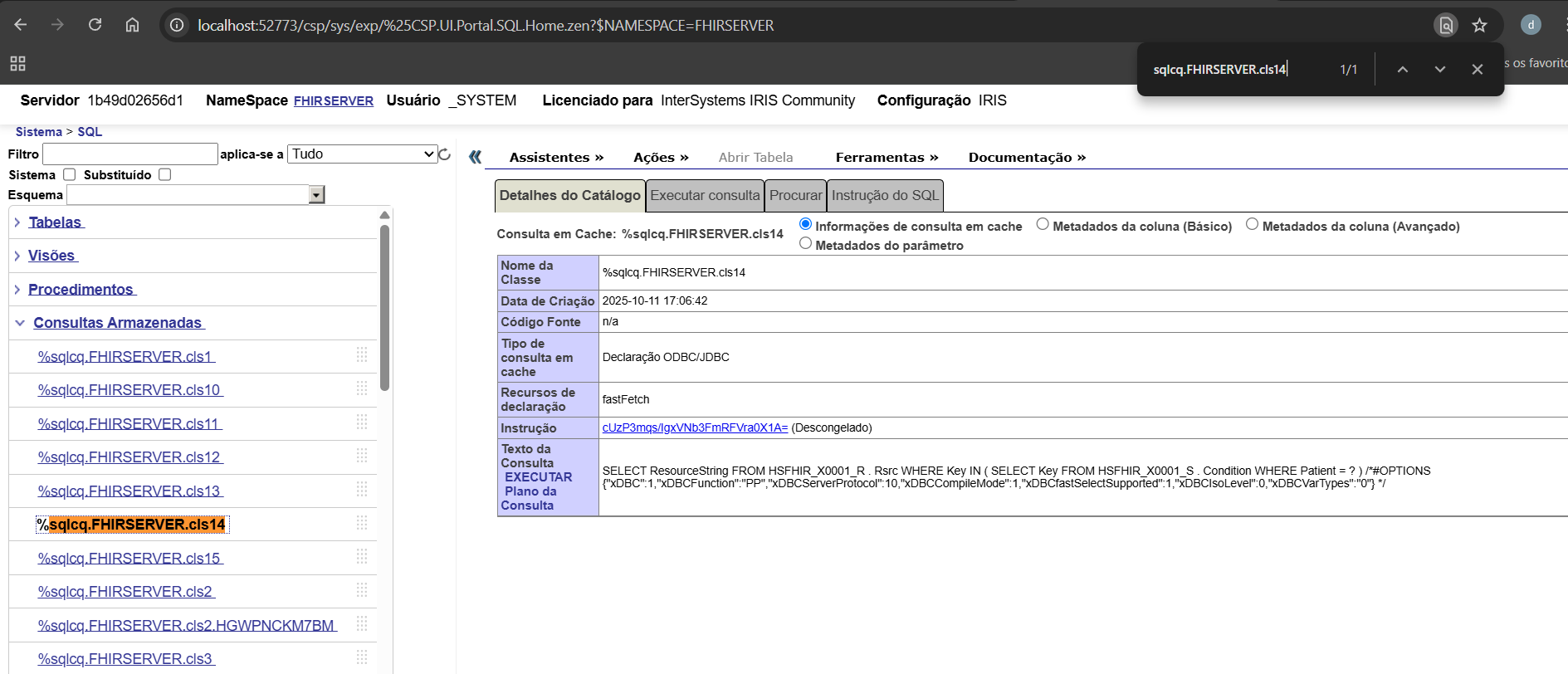

Unselective="false" (Indexed) SQL Query Plan

This query is looping over the SearchTable values and, for each row, cross-referencing the MessageHeader table. For a value that is unique and doesn’t have many messages associated with it (i.e. Patient Account Number), this is more efficient.

Unselective="true" (%NOINDEX) SQL Query Plan

This query is looping over the MessageHeader table and, for each row, cross-referencing the SearchTable values. For a value that has many results associated with it (i.e. FacilityID), this method is faster to return the results.

How to Identify Problem Fields

The best way I have found to identify which fields need to be marked as Unselective is with Show Query. Create a separate search using each field in Message Viewer (adding the SearchTable field via Add Criterion) then click Show Query to show you the actual SQL being used by Message Viewer to pull the messages based on the filters selected.

Our first example is using a field from the SearchTable that does not have the Unselected parameter added. Notice the EnsLib_HL7.SearchTable.PropId = 19 and EnsLib_HL7.SearchTable.PropValue = '2009036'. This indicates the SearchTable field added as a filter and what value is being checked. Keep in mind that the ProdId will be unique to each search table field and may change from environment to environment.

How to Add Show Query to Message Viewer

If you don’t have the Show Query button enabled in Message Viewer, you can set the following Global in your given namespace.

set ^Ens.Debug("UtilEnsMessages","sql")=1

Viewing the SQL Query Used by the Message Viewer

SQL - Unselective=“false”

SELECT TOP 100

head.ID As ID, {fn RIGHT(%EXTERNAL(head.TimeCreated),999 )} As TimeCreated,

head.SessionId As Session, head.Status As Status,

CASE head.IsError

WHEN 1

THEN 'Error' ELSE 'OK' END As Error, head.SourceConfigName As Source,

head.TargetConfigName As Target, head.SourceConfigName, head.TargetConfigName,

head.MessageBodyClassName As BodyClassname,

(SELECT LIST(PropValue)

FROM EnsLib_HL7.SearchTable

WHERE (head.MessageBodyId = DocId) And PropId=19) As SchTbl_FacilityID,

EnsLib_HL7.SearchTable.PropId As SchTbl_PropId

FROM Ens.MessageHeader head, EnsLib_HL7.SearchTable

WHERE (((head.SourceConfigName = 'component_name' OR head.TargetConfigName = 'component_name'))

AND head.MessageBodyClassName=(('EnsLib.HL7.Message'))

AND (head.MessageBodyId = EnsLib_HL7.SearchTable.DocId)

AND EnsLib_HL7.SearchTable.PropId = 19 AND EnsLib_HL7.SearchTable.PropValue = '2009036')

ORDER BY head.ID Desc

Next, take that query into SQL and manually modify it to add %NOINDEX. This is what is telling the query to not treat this value as an index.

SQL - Unselective=”true” - %NOINDEX

SELECT TOP 100

head.ID As ID, {fn RIGHT(%EXTERNAL(head.TimeCreated),999 )} As TimeCreated,

head.SessionId As Session, head.Status As Status,

CASE head.IsError

WHEN 1

THEN 'Error' ELSE 'OK' END As Error, head.SourceConfigName As Source,

head.TargetConfigName As Target, head.SourceConfigName, head.TargetConfigName,

head.MessageBodyClassName As BodyClassname,

(SELECT LIST(PropValue)

FROM EnsLib_HL7.SearchTable

WHERE (head.MessageBodyId = DocId) And PropId=19) As SchTbl_FacilityID,

EnsLib_HL7.SearchTable.PropId As SchTbl_PropId

FROM Ens.MessageHeader head, EnsLib_HL7.SearchTable

WHERE (((head.SourceConfigName = 'component_name' OR head.TargetConfigName = 'component_name'))

AND head.MessageBodyClassName='EnsLib.HL7.Message'

AND (head.MessageBodyId = EnsLib_HL7.SearchTable.DocId)

AND EnsLib_HL7.SearchTable.PropId = 19 AND %NOINDEX EnsLib_HL7.SearchTable.PropValue = (('2009036')))

ORDER BY head.ID Desc

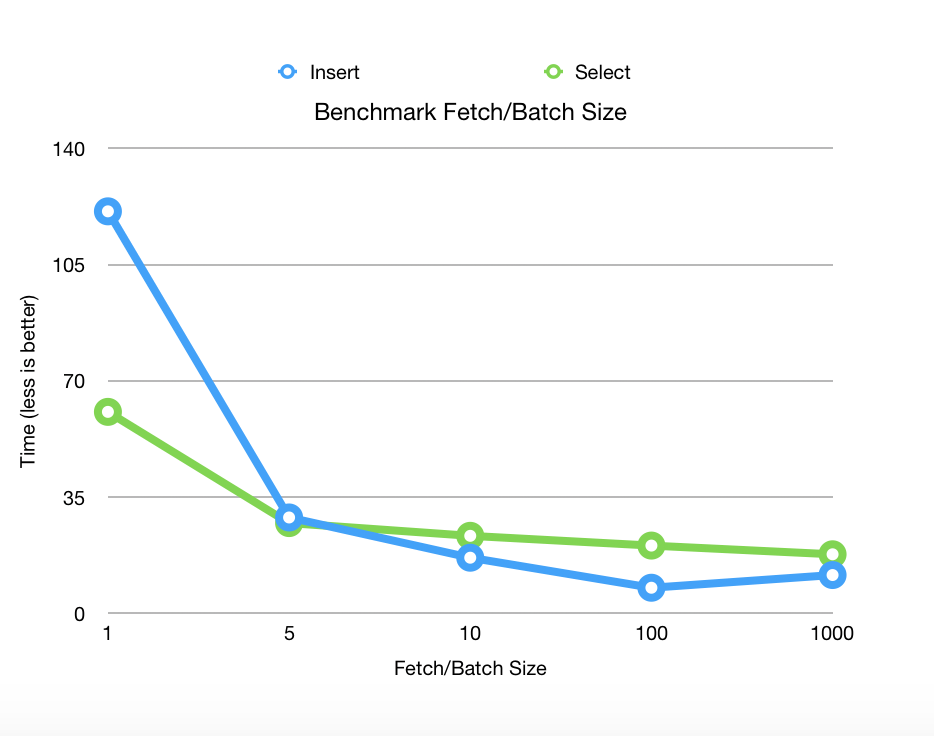

If there is a significant difference in the amount of time needed to return the first and second queries, then you have found a field that should be modified. In our case, we went from queries timing out after a few minutes to an almost instantaneous return.

Applying the Fix - Modifying Your Code

Once you have identified which fields need to be fixed, you can add the Unselective="true" to each affected Item in your custom SearchTable class. See the below example.

Custom SearchTable Class

/// Custom HL7 Search Table adds additional fields to index.

Class CustomClasses.SearchTable.CustomSearchTable Extends EnsLib.HL7.SearchTable

{

XData SearchSpec [ XMLNamespace = "http://www.intersystems.com/EnsSearchTable" ]

{

<Items>

// Increase performance by setting Unselective="true" on fields that are not highly unique.

// This essentially tells Message Search to not use an index on these fields.

// facility ID in MSH.4

<Item DocType="" PropName="FacilityID" Unselective="true"> [MSH:4] </Item>

// Event Reason in EVN.4

<Item DocType="" PropName="EventReason" Unselective="true"> [EVN:4] </Item>

// Patient Account (Add PV1.19 to prebuilt PID.18 search)

<Item DocType="" PropName="PatientAcct"> [PV1:19] </Item>

// Document type

<Item DocType="" PropName="DocumentType" Unselective="true"> [TXA:2] </Item>

// Placer Order ID

<Item DocType="" PropName="PlacerOrderID"> [OBR:2.1] </Item>

// Filler Order ID

<Item DocType="" PropName="FillerOrderID"> [OBR:3.1] </Item>

// Universal Service ID

<Item DocType="" PropName="ServiceID" Unselective="true"> [OBR:4.1] </Item>

// Universal Service ID

<Item DocType="" PropName="ProcedureName" Unselective="true"> [OBR:4.2] </Item>

// Diagnostic Service Section

<Item DocType="" PropName="ServiceSectID" Unselective="true"> [OBR:24] </Item>

// Appointment ID

<Item DocType="" PropName="AppointmentID"> [SCH:2] </Item>

// Provider Fields

<Item DocType="" PropName="ProviderNameMFN"> [STF:3()] </Item>

<Item DocType="" PropName="ProviderIDsMFN"> [MFE:4().1] </Item>

<Item DocType="" PropName="ProviderIDsMFN"> [STF:1().1] </Item>

<Item DocType="" PropName="ProviderIDsMFN"> [PRA:6().1] </Item>

</Items>

}

Storage Default

{

<Type>%Storage.Persistent</Type>

}

}

Summary

Quick message searching is vital to day-to-day integration operations. By utilizing the Unselective property, it is now possible to maintain this functionality, despite an ever-growing database. With this quick and easy-to-implement change, you will be back on track to confidently providing service and troubleshooting issues in your Health Connect environment.