Hi Community,

This article describes the small ZPM module global-archiver.

Its goal is to move part of a global from one database to another.

Why this package?

A typical use case is read-only data that is appended sequentially to your database and can never be deleted.

For example:

- User access logs for patient medical data.

- Medical document versioning.

Depending on how intensively your application is used, this data can greatly increase your database size.

To reduce backup time, it can be useful to move this data to a database dedicated to archiving and to back up that database only after an archive process has run.

How does it work?

global-archiver copies a global using the Merge command and a database-level (extended) global reference.

The process needs only the global name, the last identifier, and the archive database name.

The archive database can be a remote database: the syntax ^["/db/dir/","server"]GlobalName is also supported.

After the copy, a global mapping is automatically set up with the related subscript.

See the copy method here
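As a rough illustration of the idea (this is not the package's actual code), the sketch below copies every first-level node whose identifier is at or below a given last identifier, using Merge with an extended global reference. The global name ^MyApp.LogD, the database directory /db/archive/, and the value of lastId are assumptions chosen for the example, and it assumes numeric identifiers.

```objectscript
    ; Minimal sketch, not the actual implementation of global-archiver.
    ; ^MyApp.LogD and /db/archive/ are hypothetical names.
    Set source = $Name(^MyApp.LogD)
    ; Extended reference pointing at a database directory on the local system
    Set target = $Name(^|"^^/db/archive/"|MyApp.LogD)
    Set lastId = 5000  ; hypothetical last identifier to archive

    Set id = ""
    For {
        ; Walk the first-level subscripts in collation order
        Set id = $Order(@source@(id))
        Quit:(id="")||(id>lastId)
        ; Copy the node and all of its descendants to the archive database
        Merge @target@(id) = @source@(id)
    }
```

The source data is left in place by this loop; removing it is a separate step, just as in the package.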

Let's do a simple test

ZPM Installation

zpm "install global-archiver"

Generate sample data:

Do ##class(lscalese.globalarchiver.sample.DataLog).GenerateData(10000)

Now we copy the data with a DateTime older than 30 days:

Set lastId = ##class(lscalese.globalarchiver.sample.DataLog).GetLastId(30)

Set Global = $Name(^lscalese.globalarcCA13.DataLogD)

Set sc = ##class(lscalese.globalarchiver.Copier).Copy(Global, lastId, "ARCHIVE")

Our data are copied, and we can delete archived data from the source database.

On a live system, it's strongly recommended to have a backup before cleaning.

Set sc = ##class(lscalese.globalarchiver.Cleaner).DeleteArchivedData(Global,"ARCHIVE")
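Both calls above assign their result to sc. Assuming this is a standard %Status value (which the variable name suggests but this article does not state), a script could check it before deleting anything. The sketch below is meant for a method or routine rather than for line-by-line entry in the terminal.

```objectscript
    Set Global = $Name(^lscalese.globalarcCA13.DataLogD)
    Set lastId = ##class(lscalese.globalarchiver.sample.DataLog).GetLastId(30)
    Set sc = ##class(lscalese.globalarchiver.Copier).Copy(Global, lastId, "ARCHIVE")
    If $SYSTEM.Status.IsError(sc) {
        ; Assumption: sc is a %Status. Show the error and leave the source data untouched.
        Do $SYSTEM.Status.DisplayError(sc)
    } Else {
        ; The copy succeeded, so the archived nodes can be removed from the source database
        Set sc = ##class(lscalese.globalarchiver.Cleaner).DeleteArchivedData(Global, "ARCHIVE")
        If $SYSTEM.Status.IsError(sc) { Do $SYSTEM.Status.DisplayError(sc) }
    }
```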

Now, open the global mapping page in the Management Portal. You should see a mapping with a subscript for the global lscalese.globalarcCA13.DataLogD.

To see the data, open the global explorer in the Management Portal, select a database instead of a namespace, and view the global lscalese.globalarcCA13.DataLogD in the USER and ARCHIVE databases.

Great, the data has been moved and, thanks to global mapping, no changes to your application code are needed.

Advanced usage - Mirroring and ECP

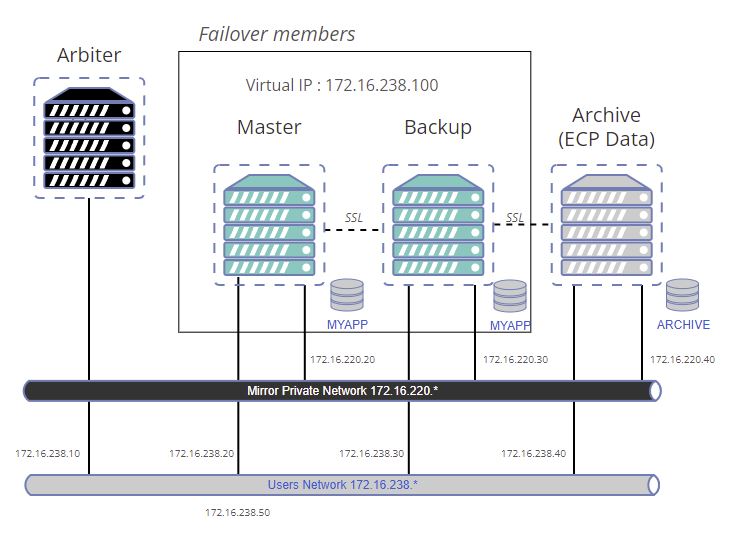

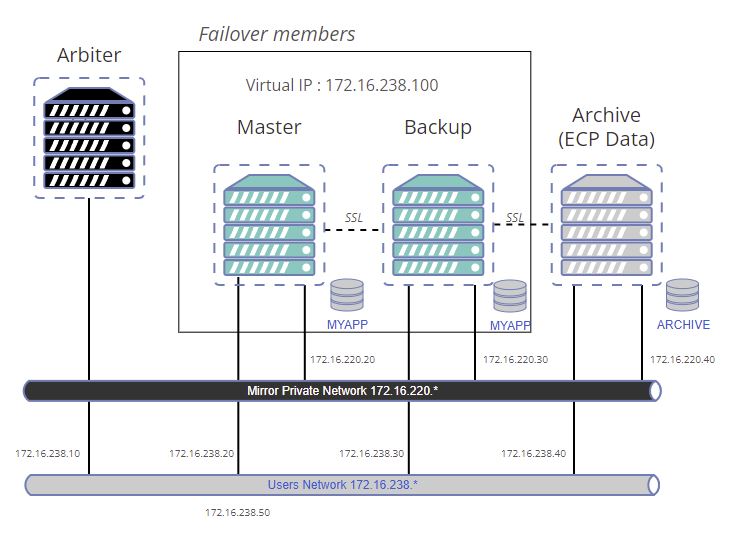

The previous test was very simple. In the real world, we most often use mirroring for high availability, and in that setup global mapping can be a problem.

When we perform an archive process, global mapping is only set up on the primary instance.

To solve this problem, a helper method NotifyBecomePrimary is available; it must be called by the ZMIRROR routine.

ZMIRROR documentation link .
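A minimal sketch of such a ZMIRROR routine could look like the following. NotifyBecomePrimary^ZMIRROR is the entry point that IRIS calls on a member once it has become primary, and the ZMIRROR routine is stored in the %SYS namespace. This article does not name the class that exposes the helper, so the helperClass value below is a placeholder to replace. The sketch also assumes that the helper takes no arguments and that global-archiver is installed in the USER namespace.

```objectscript
ZMIRROR ; user-defined mirror event routine (stored in %SYS)
    Quit

NotifyBecomePrimary() Public {
    ; Called by IRIS after this member has become the primary.
    New $Namespace
    Set $Namespace = "USER"  ; assumption: namespace where global-archiver is installed
    ; Placeholder (hypothetical name): replace with the class that provides the helper
    Set helperClass = "My.Placeholder.HelperClass"
    Try {
        ; Assumption: the helper is a class method that takes no arguments
        Do $ClassMethod(helperClass, "NotifyBecomePrimary")
    } Catch ex {
        ; Record the problem in the application error log instead of failing the callback
        Do ex.Log()
    }
    Quit
}
```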

A sample that starts a container architecture with failover members is available on this GitHub page.

This sample is also a way to test copying to a remote database: as the diagram shows, our archive database will be a remote database (using ECP).

git clone https://github.com/lscalese/global-archiver-sample.git

cd global-archiver-sample

Copy your "iris.key" into this directory.

This sample uses a local directory as a volume to share the database file IRIS.DAT between containers.

We need to set security settings on the ./backup directory.

sudo chgrp irisowner ./backup

If the irisowner group and user do not exist yet on your system, create them.

sudo useradd --uid 51773 --user-group irisowner

sudo groupmod --gid 51773 irisowner

Log in to the InterSystems Container Registry

Our docker-compose.yml uses references to containers.intersystems.com.

So you need to log in to the InterSystems Container Registry (ICR) to pull the images it uses.

If you don't remember your password for the docker login to ICR, open https://login.intersystems.com/login/SSO.UI.User.ApplicationTokens.cls to retrieve your docker token.

docker login -u="YourWRCLogin" -p="YourPassWord" containers.intersystems.com

Generate certificates

Mirroring and ECP communications are secured by TLS/SSL, so we need to generate certificates for each node.

Simply use the provided script:

# sudo is required due to usage of chown, chgrp, and chmod commands in this script.

sudo ./gen-certificates.sh

Build and run containers

# use sudo to avoid permission issues

sudo docker-compose build --no-cache

docker-compose up

Wait a moment: a post-start script is executed on each node, and it takes a while.

Test

Open an IRIS terminal on the primary node:

docker exec -it mirror-demo-master irissession iris

Generate data:

Do ##class(lscalese.globalarchiver.sample.DataLog).GenerateData(10000)

If you get a "class does not exist" error, don't worry: the post-start script has probably not installed global-archiver yet. Just wait a bit and retry.

Copy data older than 30 days to the ARCHIVE database:

Set lastId = ##class(lscalese.globalarchiver.sample.DataLog).GetLastId(30)

Set Global = $Name(^lscalese.globalarcCA13.DataLogD)

Set sc = ##class(lscalese.globalarchiver.Copier).Copy(Global, lastId, "ARCHIVE")

Delete archived data from the source database:

Set sc = ##class(lscalese.globalarchiver.Cleaner).DeleteArchivedData(Global,"ARCHIVE")

Check the global mapping

Great, now open the Management Portal on the current primary node, check the global mapping for the USER namespace, and compare it with the global mapping on the backup node.

You should notice that the global mapping related to archived data is missing on the backup node.

Stop the current primary to force the mirror-demo-backup node to become the primary.

docker stop mirror-demo-master

When mirror-demo-backup becomes the primary, the ZMIRROR routine is executed and the global mapping for the archived data is set up automatically.

Check data access

Simply execute the following SQL query:

SELECT
ID, AccessToPatientId, DateTime, Username
FROM lscalese_globalarchiver_sample.DataLog

Verify the number of records; it must be 10,000:

SELECT
count(*)
FROM lscalese_globalarchiver_sample.DataLog
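The same count can also be checked from ObjectScript, for example in a small verification method. This is only a sketch using the standard %SQL.Statement class; it assumes it runs in the namespace where the sample class is installed (USER in this walkthrough).

```objectscript
    ; Run the count query with dynamic SQL
    Set rs = ##class(%SQL.Statement).%ExecDirect(, "SELECT COUNT(*) FROM lscalese_globalarchiver_sample.DataLog")
    If rs.%SQLCODE < 0 {
        ; A negative SQLCODE means the statement failed
        Write "SQL error ", rs.%SQLCODE, ": ", rs.%Message, !
    } ElseIf rs.%Next() {
        ; First (and only) column of the single result row
        Write "Number of records: ", rs.%GetData(1), !
    }
```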

Links - Access to portals

Master: http://localhost:81/csp/sys/utilhome.csp

Failover backup member: http://localhost:82/csp/sys/utilhome.csp

ARCHIVE ECP Data server: http://localhost:83/csp/sys/utilhome.csp
