Awesome, this is exactly what I was looking for! Thank you. I was getting close with %Get and the iterator but wasn't quite making the connection with the whole line.LineNumber part.

Thanks!!!

Thanks for your answer as well! This is actually what we were already doing with 2015.1 when handling JSON. So moving into 2017.1, I was hoping to make more use of the built-in JSON handlers because the JSON response I posted was a rather simple one - some of our more complex responses have our string manipulations getting quite long in the tooth.

Thanks for your answer, Robert. I had considered the option of using a 'Privileged Application' and after your post, have started down that path but that still leaves open my original question...

How do I determine what resource/privilege is required to execute this query? %Developer does not work.

Is there documentation I'm missing in the API reference or elsewhere that indicates the necessary permissions needed to make certain calls? I only wish to grant the minimum necessary but right now, only %All makes it work. Is there some sort of debug I can use to see what role it's running a $SYSTEM.Security.Check on when it tries to execute this query?

Thanks Robert, I understand. I ended up solving this another way anyway... I realized I did not have my REST Dispatch Web App sharing the same cookies path as my main web app so even though I was logging in with authorized credentials that should have no problem running this query, when I tried to execute it via REST I passed into another 'cookie domain' and lost the authorization header.

Once I fixed that setting, it works just fine for the users it should and I don't have to grant Unknown User elevated privileges, which is ideal.

Thanks again!

Not sure if your issue is the same I ran into when I got this error, but to solve I did the following:

1. In the Eclipse installer, click the 'Network' button (it's next to the ? Help button in the lower tool bar.)

2. Make sure your Active Provider setting is set to Direct. By default, the installer was set to Manual I think and after I changed to Direct, I was able to read the repository.

Thanks Nicholai! I swear I tried this in some iteration (I've re-written this a dozen different ways it feels like) but if it's working for you, clearly I haven't! I'll give it a go and mark your answer as accepted once successful.

Appreciate it!

Edit: Yep it worked. Knew it was something simple. Thanks again.

Has anyone had success using this or a similar solution running Health Connect/HealthShare on an AIX 7.2 platform? LibreOffice does not provide AIX-specific packages.

Appreciate the response but what I'm looking to do is convert RTF to PDF and then base64 encode it.

Does it need to be the 16.1 (or greater) XL C fileset (we have a lesser installed)? And just the runtime or the compilation as well? Verifying for our systems' guys.

While not truly extending Ens.Util.LookupTable, we have numerous cases where we have to handle multiple values against a single key.

Our solution was to identify a special character we would use as a delimiter of values (say @ for instance) and store them as such: value1@value2

We then have a custom CoS function that we can use to perform the normal lookup functions that parses the values and returns them as an array object that we can then use to pull out the value we need.

If you are simply after a pair of values, then the CoS is not even needed as you could use the built-in Piece function to rip apart the values using the delimiter.
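
As a rough sketch of the delimiter approach described above (the stored value and the @ delimiter are just placeholders for illustration, not our actual table contents), pulling values back out with $Piece could look something like this:

```objectscript
// Hypothetical stored value; in practice this would come back from the lookup table.
Set tStored = "value1@value2"

// For a simple pair, $Piece pulls out either side of the delimiter.
Set tFirst = $Piece(tStored, "@", 1)   // "value1"
Set tSecond = $Piece(tStored, "@", 2)  // "value2"

// For an arbitrary number of values, count the pieces with $Length and walk them.
For i = 1:1:$Length(tStored, "@") {
    Write "Value ", i, ": ", $Piece(tStored, "@", i), !
}
```

The main design caveat is picking a delimiter that can never appear inside a real value.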

Good morning - I reported this issue when we installed 2017.1.0 last summer.

They recently addressed it in 2017.1.2 (released in Nov 2017 I think?):

http://docs.intersystems.com/documentation/cache/releasenotes/201712/re…

Summary: Ensure All Matching Results Appear in Message Viewer

Description:

In the Message Viewer, users can filter the results which will be displayed by various criteria. However, when the search criteria included certain extended criteria and the selected Page Size was smaller than the total number of messages, some of the matching results were not displayed even if you navigated to the next page. This change ensures that all matching messages are displayed.

We updated our environments to 2017.1.2 and this issue no longer occurs.

Hope this helps!

-Craig

Good morning Eric -

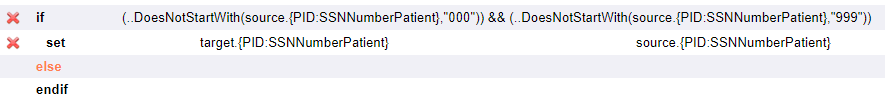

The logical operator for OR in a DTL is a double pipe - || - whereas an AND is a double ampersand - &&.

See: http://oairsintp.ssfhs.org:57772/csp/docbook/DocBook.UI.Page.cls?KEY=TCOS_Logical

So in your case, I'd recommend an IF/ELSE block

IF (source.PROP45 = "whatever") || (source.PROP45 = "whateverelse")

set target.{PD1.AdvanceDirectiveCode(1).identifierST} = trueoption

ELSE

set target.{PD1.AdvanceDirectiveCode(1).identifierST} = falseoption
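
One thing to watch with a condition like the one above: ObjectScript evaluates binary operators strictly left to right, so it is generally safest to wrap each comparison in parentheses when combining them with || or &&. A small sketch (tValue is just an illustrative local variable, not part of the DTL):

```objectscript
Set tValue = "whateverelse"

// Without parentheses this is read as ((tValue = "whatever") || tValue) = "whateverelse",
// which evaluates to 0 here even though tValue matches the second option.
Write tValue = "whatever" || tValue = "whateverelse", !

// With parentheses each comparison is evaluated on its own; this writes 1.
Write (tValue = "whatever") || (tValue = "whateverelse"), !
```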

There are also some built in functions the DTL editor provides to do it all in one line. It comes down to preference on readability, in my opinion.

EDIT - here's an example using the AND operator in the DTL (&&) but in much the same way, you could use an OR (||) operator.

In our research with the security team and Windows server team, the advisory indicates that there are additional options provided to require the TLS/SSL bind but it is not automatically turned on for existing servers. The InterSystems advisory above (and what was sent out via email) makes it sound like this will cause immediate failure on all existing configurations, but that doesn't appear to be the case after review. Is this correct?

We are based on AIX 7.2, so the other challenge is that we use a round-robin DNS hostname to access the AD LDAP servers (ldap.ourdomain.example), but the round-robin hands off to 3 different servers, each with its own cert. InterSystems only provides a single field for a PEM cert. Will it accept a concatenated PEM cert containing the info for all three servers? We have had to use this approach previously for Java keystores authenticating through LDAP.

From your screenshot, I see you're using a custom message class ORMFARM.amplitudeHTTPRequest. Have you extended the class to include Ens.Request?

I recently had a similar project and to get the request and response message bodies to show in trace, I had to include Ens.Request and Ens.Response (respectively) in my class definition:

Class MyRequestClassExample Extends (Ens.Request, Ens.Util.MessageBodyMethods, %JSON.Adaptor)

{

Property Example As %String;

}

Thanks for your response - here is a snippet that doesn't compile:

[screenshot: DTL snippet]

ERROR: FAIS.DTL.MyDtlName.cls(Transform+11) #1011: Invalid name : '(source.Items.(i).ItemNumber=".1")' : Offset:21

You see it appears to be complaining about the reference to the source object (at least if I'm reading the error right... maybe I'm not!)

If I change line 3 (edit: and line 4 and 5 too, of course, as appropriate) to source.Items.GetAt(i).ItemNumber=".1", it works as it should and compiles and runs perfectly. But as you point out, the compiler should boil that down for me.

Here's the source: [screenshot]

Good morning Gertjan -

Thanks for confirming my suspicions re: a potential bug. Yes, I'm aware of the availability of local variables, certainly not a new user of InterSystems' products hence my confusion when what I suspected should work, based on my prior experiences, did not.

Since the 'case' is a conditional and usually treated like a shorthand 'if' in compilers, I had thought the IRIS compiler would boil it down much the same.

I will open a WRC incident to let them know of the issue and utilize a workaround for now. Many thanks for your help proving I wasn't crazy!

For anyone reading this in the future, this was acknowledged as a bug by the WRC - devchange JGM926. Scheduled for 2020.2 release (subject to change.)

Simple workaround, pointed out by Gertjan's answer, is to store the value to a local variable and use the local variable in the case statement.

[screenshot: workaround using a local variable]

Are there any plans to bring the FHIR capabilities to the EM channel - 2020.1.x? While I appreciate the difference between CD/EM models, these FHIR updates could be critical for those of us that have to stick to the EM model (particularly the FHIR R4 and FHIR Server changes) without needing to wait until a 2021.x release.

We had this exact scenario a year ago or so and the easiest solution for us, since we aren't using System Default Settings like Eduard mentioned, was to open up the production class in Studio and do a search/replace (Ctrl-H)

Search for: epicsup

Replace with epictst

Then save/compile. Note that the change takes effect immediately (edit: trying to recall if we had to click the 'Update' button in the Production Monitor for the connections to disconnect from the old and reconnect to the new. Definitely verify in your production monitor that the connections were established to their new destination.)

Good morning Sebastian -

I read your post yesterday and was hopeful someone from InterSystems might respond with the best practices here as I tackled this situation myself a few months ago and had similar questions - the documentation isn't explicitly clear how this should be handled but certainly there are tools and classes available within HealthConnect to rig this up. As there has been no other response, I'll share how I handled this. Maybe others will chime in if they know of a better approach.

For my need, I am working with a vendor that requires we call their OAuth API with some initial parameters sent in a JSON body to receive back a response containing the Bearer token to be used in other API calls.

To achieve this, I created a custom outbound operation for this vendor that extends EnsLib.REST.Operation and using an XData MessageMap, defined a method that would execute the API call to get the Bearer token from the vendor (with the JSON body attributes passed in as a custom message class) and then another method that would execute the other API call that would utilize the Bearer token and pass along the healthcare data defined for this implementation (using a separate custom message class.) XData MessageMap looks similar to this:

XData MessageMap

{

<MapItems>

<MapItem MessageType="MyCustomMessageClass.VendorName.Request.GetBearerToken">

<Method>GetBearerToken</Method>

</MapItem>

<MapItem MessageType="MyCustomMessageClass.VendorName.Request.SubmitResult">

<Method>SubmitResult</Method>

</MapItem>

</MapItems>

}

Within that GetBearerToken method, I define the %Net.HttpRequest parameters, including the JSON body that I extract from the custom message class using %JSON.Adaptor's %JSONExportToString function, and execute the call. On successful status, I take the response coming back from the vendor and convert it into another custom message class (e.g. MyCustomMessageClass.VendorName.Response.GetBearerToken.)

From here, I simply need a way to use my custom outbound operation, define the values in the Request message class and utilize the values coming back to me in the response message class. For that, I created a BPL that controls the flow of this process. In my case, the Bearer token defined by the vendor has a lifespan that is defined in the response message so I can also store off the Bearer token for a period of time to reduce the amount of API calls I make on a go-forward basis.

Here is an example BPL flow showing the call-outs to get the Bearer token:

So you can see at the top I'm executing a code block to check my current token for expiration based on a date/time I have stored with it. If expired, I call out to my custom operation with the appropriate message class (see the Xdata block I showed) to get a new token and if successful, I execute another code block to read the custom response message class to store off the Bearer token and its expiration date.

From there it's just a matter of stepping into the next flow of the BPL to send a result that utilizes that Bearer token for its API calls. Each time this BPL is executed, the Bearer token is checked for validity (i.e. not expired) - If expired, it gets a new one, if not, it utilizes the one that was saved off and then the next part of the BPL (not shown), crafts a message for the SubmitResult part of the custom operation and inside that operation, SubmitResult utilizes the stored Bearer token as appropriate to execute its API call.
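
To give a rough idea of the expiration check described above, here is a minimal sketch. The method names and the choice to store the expiration in $HOROLOG format are assumptions for illustration only; my actual code blocks differ and how you persist the token is up to you.

```objectscript
/// Hypothetical helper: returns 1 when the cached token should be refreshed.
/// pExpiresAt is assumed to be stored in $HOROLOG format ("days,seconds").
ClassMethod TokenIsExpired(pExpiresAt As %String) As %Boolean
{
    // No stored expiration means there is no usable token.
    If pExpiresAt = "" Quit 1
    Set tNow = $Horolog
    Set tNowSecs = ($Piece(tNow, ",", 1) * 86400) + $Piece(tNow, ",", 2)
    Set tExpSecs = ($Piece(pExpiresAt, ",", 1) * 86400) + $Piece(pExpiresAt, ",", 2)
    Quit (tNowSecs >= tExpSecs)
}

/// Hypothetical helper: turns the lifespan (in seconds) from the vendor response
/// into an expiration stamp in $HOROLOG format.
ClassMethod ComputeExpiry(pLifespanSeconds As %Integer) As %String
{
    Set tNow = $Horolog
    Set tSecs = ($Piece(tNow, ",", 1) * 86400) + $Piece(tNow, ",", 2) + pLifespanSeconds
    Quit (tSecs \ 86400) _ "," _ (tSecs # 86400)
}
```

In practice it may be worth subtracting a small safety margin from the lifespan so a token is not used right as it expires.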

Hopefully I explained enough to get you going or basically echo what you were thinking of doing.

But I'd certainly be interested in hearing a better approach if there is one.

Regards,

Craig

Hi Sebastian - You are correct in that I implement it all directly in the Operation and the BPL handler with respect to getting the token (Handled in the Operation) and the storing of it (handled in the BPL.) I am not currently using any %OAuth framework functionalities though I have started to peek at them. They don't seem to match my use-case and perhaps it has something to do with how the vendor I'm working with has implemented their OAuth messaging.

My GetBearerToken function ends up looking something like this - below is an early working version. I have since cleaned things up and encapsulated a lot of the build-up of the %Net.HttpRequest object using a custom framework I developed but this will give you a general idea.

My custom message classes extend Ens.Request (or Ens.Response as appropriate) and %JSON.Adaptor. The properties defined within are aligned with what the vendor expects to receive or send back. For instance, when sending this request to the vendor, they typically expect a Client Id, Client Secret, and the Audience and Grant Type being requested for the token. My BPL defines all those properties dynamically before sending the call to the operation.

/// REST WS Method to fetch an environment specific OAuth Bearer Token.

Method GetBearerToken(pRequest As MyCustomMessageClass.Vendor.Request.GetBearerToken, Output pResponse As MyCustomMessageClass.Vendor.Response.GetBearerToken) As %Status

{

// Endpoint for retrieval of Bearer Token from Vendor

Set tURL = "https://vendor.com/oauth/token"

Try {

Set sc = pRequest.%JSONExportToString(.jsonPayload)

THROW:$$$ISERR(sc) $$$ERROR($$$GeneralError, "Couldn't execute object to json conversion")

// ..%HttpRequest is a reference to a class defined variable of type %Net.HttpRequest.

// Set HTTP Request Content-Type to JSON

Set tSC=..%HttpRequest.SetHeader("content-type","application/json")

// Write the JSON Payload to the HttpRequest Body

Do ..%HttpRequest.EntityBody.Write()

S tSC = ..%HttpRequest.EntityBody.Write(jsonPayload)

// Call SendFormDataArray method in the adapter to execute POST. Response contained in tHttpResponse

Set tSC=..Adapter.SendFormDataArray(.tHttpResponse,"POST", ..%HttpRequest, "", "", tURL)

// Validate that the call succeeded and returned a response. If not, throw error.

If $$$ISERR(tSC)&&$IsObject(tHttpResponse)&&$IsObject(tHttpResponse.Data)&&tHttpResponse.Data.Size {

Set tSC = $$$ERROR($$$EnsErrGeneral,$$$StatusDisplayString(tSC)_":"_tHttpResponse.Data.Read())

}

Quit:$$$ISERR(tSC)

If $IsObject(tHttpResponse){

// Instantiate the response object

S pResponse = ##class(MyCustomMessageClass.Vendor.Response.GetBearerToken).%New()

// Convert JSON Response Payload into a Response Object

S tSC = pResponse.%JSONImport(tHttpResponse.Data)

}

} Catch {

// If error anywhere in the process not caught previously, throw error here.

Set tSC = $$$SystemError

}

Quit tSC

}

Good morning Dmitriy - I'm not sure I 100% understand what you're asking but in my experience with %JSON.Adaptor, there is only one additional step you need to do to get the string into %DynamicObject:

Set tDynObj = {}.%FromJSON(output)

While I agree it would be handy for %JSON.Adaptor to have a way to do this with one of their export methods, I think the intent may be to allow us to immediately take the JSON as a string to write it out to an HTTP Request body, which is where I use it most:

Set sc = pRequest.%JSONExportToString(.jsonPayload)

THROW:$$$ISERR(sc) $$$ERROR($$$GeneralError, "Couldn't execute object to json conversion")

// Set HTTP Request Content-Type to JSON

Set tSC=..%HttpRequest.SetHeader("content-type","application/json")

// Write the JSON Payload to the HttpRequest Body

Do ..%HttpRequest.EntityBody.Write()

S tSC = ..%HttpRequest.EntityBody.Write(jsonPayload)

Not sure what you're asking regarding the ID of the object. Which object? If you're referring to a persistent message class that extends %JSON.Adaptor, there is %Id() but I haven't used it so not sure if that's what you're after or not.

Apologies as I haven't gotten into the whole Swagger generated API thing yet (working that direction though.) But to your desired output above, could you not do something like:

Set tRetObj = {}

Set tSC = article.%JSONExportToString(.tArticleJSON)

Set tRetObj.article = {}.%FromJSON(tArticleJSON)

Return tRetObj

Again, maybe I'm not fully understanding so I'll butt out after this reply and maybe someone else can help better. :-) I do see your concern re: MAXSTRING though and have encountered this concern myself. Though taking the export to string out of the return statement I think would allow you to handle that exception better.
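
On the MAXSTRING concern, one possible way around it is to export to a stream rather than a string and hand the stream to %FromJSON. This is only a sketch: the method name and pArticle parameter are hypothetical, and I'm assuming your version has %JSONExportToStream on %JSON.Adaptor and a %FromJSON that accepts a stream, so verify against your class reference.

```objectscript
/// Hypothetical helper; pArticle is assumed to be an instance of a class that extends %JSON.Adaptor.
ClassMethod ArticleToDynamic(pArticle As %JSON.Adaptor, Output pRetObj As %DynamicObject) As %Status
{
    Set pRetObj = {}
    // Export to a stream instead of a string so large objects do not hit <MAXSTRING>.
    Set tStream = ##class(%Stream.GlobalCharacter).%New()
    Set tSC = pArticle.%JSONExportToStream(.tStream)
    If $$$ISERR(tSC) Quit tSC
    Do tStream.Rewind()
    Try {
        // %FromJSON throws on malformed JSON, so convert any exception into a status.
        Set pRetObj.article = {}.%FromJSON(tStream)
    } Catch ex {
        Set tSC = ex.AsStatus()
    }
    Quit tSC
}
```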

I had the same challenges as you when I was tackling this - documentation wasn't really fleshing it out well enough. ISC Sales Engineer helped me work through it.

Here is what I have used with success to submit both an XML doc and PDF doc to a vendor along with two parameters associated with the request (ReportId and CustomerId.) Requires use of MIME Parts. I hope this helps you. I had to genericize some of the code to share but it is commented by me what each part does and how it pulls together.

Note this assumes you're passing in a variable pRequest that is a message class that holds your data. Also I am running this on 2019.1, not 2018 so not sure of the differences when using things like %JSONImport (may be none but I don't know that for certain.)

Set tURL = "fully_qualified_endpoint_url_here"

// Instantiate reportId MIME Part

Set reportId = ##class(%Net.MIMEPart).%New()

// Define/Set the Content-Disposition header indicating how this MIME part is encoded and what it contains.

// Final string looks like: form-data; name="reportId"

S tContentDisp1 = "form-data; name="_$CHAR(34)_"reportId"_$CHAR(34)

Do reportId.SetHeader("Content-Disposition", tContentDisp1)

// Get the ReportId from the incoming Request (from BPL) and write to the MIME Part body.

S tReportId = pRequest.ReportId

Set reportId.Body = ##class(%GlobalCharacterStream).%New()

Do reportId.Body.Write(tReportId)

// Instantiate customerId MIME Part

Set customerId = ##class(%Net.MIMEPart).%New()

// Define/Set the Content-Disposition header indicating how this MIME part is encoded and what it contains.

// Final string looks like: form-data; name="customerId"

S tContentDisp2 = "form-data; name="_$CHAR(34)_"customerId"_$CHAR(34)

Do customerId.SetHeader("Content-Disposition", tContentDisp2)

// Get the CustomerId from the incoming Request (from BPL) and write to the MIME Part body.

S tCustomerId = pRequest.CustomerId

Set customerId.Body = ##class(%GlobalCharacterStream).%New()

Do customerId.Body.Write(tCustomerId)

// Instantiate file1 (XML Structured Doc) MIME Part

Set file1 = ##class(%Net.MIMEPart).%New()

// Define/Set the Content-Disposition header indicating how this MIME part is encoded and what it contains.

// Final string looks like: form-data; name="file1"; filename="<pRequest.CaseNumber>.xml"

S tXmlFileName = pRequest.CaseNumber_".xml"

S tContentDisp3 = "form-data; name="_$CHAR(34)_"file1"_$CHAR(34)_"; filename="_$CHAR(34)_tXmlFileName_$CHAR(34)

Do file1.SetHeader("Content-Disposition", tContentDisp3)

// Get the XML as a Stream from the incoming Request (from BPL) and write to the MIME Part body.

Set tStream = ##class(%GlobalCharacterStream).%New()

Set tSC = pRequest.XmlDoc.OutputToLibraryStream(tStream)

Set file1.Body = tStream

Set file1.ContentType = "application/xml"

// Instantiate file2 (PDF Report) MIME Part

Set file2 = ##class(%Net.MIMEPart).%New()

// Define/Set the Content-Disposition header indicating how this MIME part is encoded and what it contains.

// Final string looks like: form-data; name="file2"; filename="<pRequest.CaseNumber>.pdf"

S tPdfFileName = pRequest.CaseNumber_".pdf"

S tContentDisp4 = "form-data; name="_$CHAR(34)_"file2"_$CHAR(34)_"; filename="_$CHAR(34)_tPdfFileName_$CHAR(34)

Do file2.SetHeader("Content-Disposition", tContentDisp4)

// Get the PDF Stream from the incoming Request (from BPL) and write to the MIME Part body.

Set file2.Body = pRequest.PdfDoc.Stream

Set file2.ContentType = "application/pdf"

// Package sub-MIME Parts into Root MIME Part

Set rootMIME = ##class(%Net.MIMEPart).%New()

Do rootMIME.Parts.Insert(reportId)

Do rootMIME.Parts.Insert(customerId)

Do rootMIME.Parts.Insert(file1)

Do rootMIME.Parts.Insert(file2)

// Write out Root MIME Element (containing sub-MIME parts) to HTTP Request Body.

Set writer = ##class(%Net.MIMEWriter).%New()

Set sc = writer.OutputToStream(..%HttpRequest.EntityBody)

if $$$ISERR(sc) {do $SYSTEM.Status.DisplayError(sc) Quit}

Set sc = writer.WriteMIMEBody(rootMIME)

if $$$ISERR(sc) {do $SYSTEM.Status.DisplayError(sc) Quit}

// Set the HTTP Request Headers

// Specify the Authorization header containing the OAuth2 Bearer Access Token.

Set tToken = "set your token here or pull from wherever"

Set tSC = ..%HttpRequest.SetHeader("Authorization","Bearer "_tToken)

// Specify the Content-Type and Root MIME Part Boundary (required for multipart/form-data encoding.)

Set tContentType = "multipart/form-data; boundary="_rootMIME.Boundary

Set tSC = ..%HttpRequest.SetHeader("Content-Type",tContentType)

// Call SendFormDataArray method in the adapter to execute POST. Response contained in tHttpResponse

Set tSC=..Adapter.SendFormDataArray(.tHttpResponse,"POST", ..%HttpRequest, "", "", tURL)

// Validate that the call succeeded and returned a response. If not, throw error.

If $$$ISERR(tSC)&&$IsObject(tHttpResponse)&&$IsObject(tHttpResponse.Data)&&tHttpResponse.Data.Size

{

Set tSC = $$$ERROR($$$EnsErrGeneral,$$$StatusDisplayString(tSC)_":"_tHttpResponse.Data.Read())

}

Quit:$$$ISERR(tSC)

If $IsObject(tHttpResponse)

{

// Instantiate the response object

S pResponse = ##class(Sample.Messages.Response.VendorResponseMsgClass).%New()

// Convert JSON Response Payload into a Response Object

S tSC = pResponse.%JSONImport(tHttpResponse.Data)

}

If using a pre-built outbound operation:

[screenshot: outbound operation settings]

Those are the key settings (the checkbox is what you are asking about.)

In code on the %HttpRequest object, you're looking for

Set ..%HttpRequest.SSLConfiguration = "Default" // or whatever your SSL config name is

Set ..%HttpRequest.SSLCheckServerIdentity = 1 // 1 for true, 0 for false

Your error appears to deal more with authentication/authorization (HTTP 403) as I think SSL handshake failures throw a different status code but tinker with the settings above.
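
If it helps to test outside of a production, a standalone sketch with %Net.HttpRequest might look like the following. The server name, path and SSL configuration name are placeholders; swap in your own.

```objectscript
// Placeholder server, path and SSL configuration name - adjust for your environment.
Set tRequest = ##class(%Net.HttpRequest).%New()
Set tRequest.Server = "vendor.example"
Set tRequest.Https = 1
Set tRequest.SSLConfiguration = "Default"
Set tRequest.SSLCheckServerIdentity = 1

Set tSC = tRequest.Get("/some/path")
If $$$ISERR(tSC) {
    // Connection-level problems (including TLS failures) surface here as an error status.
    Do $SYSTEM.Status.DisplayError(tSC)
} Else {
    // An HTTP-level rejection such as 403 still returns a response with a status code.
    Write "HTTP status: ", tRequest.HttpResponse.StatusCode, !
}
```

Seeing an error status versus an HTTP status code should help narrow down whether the problem is on the SSL side or the authorization side.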

I'm not really clear on what you mean by "standard Module.int" so sounds like we may be approaching this in different ways and I apologize for any confusion I caused.

%HttpRequest is %Net.HttpRequest (you can find syntax for SetHeader here) and the Adapter in this case refers to the adapter attached to the EnsLib.REST.Operation class via Parameter, which in this case is EnsLib.HTTP.OutboundAdapter.

I like your idea of handling this with an extended process - something I had not yet considered. I personally handle this by extending Operations (EnsLib.REST.Operation) to handle specific message types (XData blocks) and then use standard BPLs to manage the appropriate callouts/responses to determine what to do next.

But generally speaking, Processes can be extended with a custom class as well by extending Ens.BusinessProcess and implementing overrides to OnRequest/OnResponse. There's more documentation here:

Defining Business Processes - Developing Productions - InterSystems IRIS Data Platform 2020.4
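
As a bare-bones illustration of what extending Ens.BusinessProcess can look like (the class name, target name and completion key are all made up for this sketch, and the real logic would go where the comments are):

```objectscript
/// Hypothetical custom process; class, target and key names are placeholders.
Class MyPackage.Process.VendorWorkflow Extends Ens.BusinessProcess
{

Method OnRequest(pRequest As Ens.Request, Output pResponse As Ens.Response) As %Status
{
    // Forward the incoming request to a (hypothetical) operation and ask for a response.
    Quit ..SendRequestAsync("MyVendorOperation", pRequest, 1, "VendorCall")
}

Method OnResponse(request As Ens.Request, ByRef response As Ens.Response, callrequest As Ens.Request, callresponse As Ens.Response, pCompletionKey As %String) As %Status
{
    // Called when the asynchronous call above completes; decide what to do next here.
    If pCompletionKey = "VendorCall" {
        Set response = callresponse
    }
    Quit $$$OK
}

}
```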

Interested in hearing how you end up addressing this and suggestions others have as well. I feel like there are many ways to tackle this common need (REST API workflows) so probably several approaches I had not considered.

Thanks Oliver - Could you speak to the workload of your Health Connect production? i.e., is it for traditional HL7-based integration between EMRs/clinical apps/etc for a healthcare org?

And if so, what are your thoughts/experiences with the egress/ingress traffic costs? Does most of your traffic exist in the same cloud to mitigate that or are you living in the hybrid cloud world where a lot of traffic is still going to an onprem data center?

Appreciate your thoughts (and anyone else's) on this as we've been exploring it as well.

Appreciate the info - we do supply chain stuff as well for our org so I can relate to the message sizes there tend to be a lot smaller and infrequent than say an ADT and/or base64 PDF ORU result feed.

It's certainly not that cloud can't scale to support it - definitely can - but if our clinical document imaging is on-prem and we're bouncing those PDF ORUs between on-prem and cloud, bandwidth usage/cost becomes a significant factor especially given that's just a couple integrations and we have around 400.

It'll be interesting to see how much this factors in long term to cloud plans.

Your version is quite a bit older than the one I use but I can say this works for me. I'm not sure if it's technically supported in the sense that a future upgrade might break it but, like you, I needed this at the top of a process instead of having separate classes for each source config (ew) that were otherwise mostly identical.

..%Process.%PrimaryRequestHeader.SourceConfigName

So tread carefully if you use it.

EDIT: Found in our historical documentation that one of the methods I wrote for an Ens.Util.FunctionSet was also able to get to it this way and it was using a version around 2017.1 at the time:

Set SourceConfigName = %Ensemble("%Process").%PrimaryRequestHeader.SourceConfigName